I’m going to start this by saying that you can blame/thank* (*delete as appropriate) a colleague of mine for this post, after a conversation we had last week.

For the studies I carried out for my PhD I sought end users to help me improve the design of a piece of software, with the noble aim of making their working life, at worst, more productive and, at best, more enjoyable. On more than one occasion, however, I found myself asking them to show me how they carried out tasks so that we could essentially design the system to replicate them. Was this desirable and ethical, given the cost of the software and the amount of time users had taken to acquire skills and tacit knowledge of their workplace? In other words, were we asking them to help us design a product that would put them out of a job? I was often unsure. The software was difficult to learn, which seemed unsurprising and unavoidable given its context of use, but we (implicitly) persisted in seeking a universally usable design. On the surface this appeared to be a win-win objective: more people would be able to use it, which would reduce the number of requests expert users received from colleagues without the time or inclination to learn it (freeing up their time for their own work), and potentially increase contract renewals. However, in other respects I perceived (rightly or wrongly) that I was indirectly and unwittingly stirring up workplace politics and affecting the credibility and security of the end users’ role in their workplace.

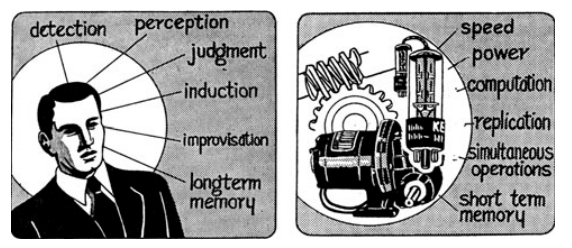

I was relieved to find last week that I am not the first person to question the implications of the trend for computers to replace humans in the workplace and the dynamics of socio-technical systems. By no means can I (or indeed will I) ignore ethical issues now that I am designing a system to be used by a faceless army of volunteers; it is in fact in my interest to tackle them head on if I want their engagement and optimum performance. By way of this recent article in New Scientist and the aforementioned conversation, I’ve stumbled across Functional Allocation Theory. Long before the era of unexpected items in bagging areas, Paul Fitts dared, in 1951, to declare and classify the functions that machines perform better than humans and the functions that humans perform better than machines. The history of what is known as the “Fitts List” is articulately reviewed by de Winter and Dodou (2014), so I don’t intend to detail it here, other than to point out that Bainbridge later developed these ideas into her narrative on the “Ironies of Automation”.

The Fitts List is also known by the memorable acronym MABA-MABA (“men are better at, machines are better at”). It was published in: Fitts PM (ed) (1951) Human engineering for an effective air navigation and traffic control system. National Research Council, Washington, DC.

Bainbridge’s work directly challenged any assumption that automation is always desirable and that unreliability and inefficiency are grounds upon which to justify the elimination of human operators from systems. The division of responsibility between humans and computers based on performance, in the tradition of Fitts, precludes the possibility of human-computer collaboration and wrongly assumes that the human operator’s performance (manifested in their effectiveness and efficiency) is constant. Machines follow programmed rules of operation in a way that humans do not; human performance is unpredictable and inextricably tied to skill and motivation, which evolve over time. Noteworthy here is the concept of “autonomy”: the level of autonomy a human operator has within a system can also feed directly back into their performance, especially in safety-critical situations. Too much can be stressful, which leads to errors, and too little can result in low job satisfaction, poor health and well-being, and consequently poor performance (assuming that they are not so demotivated and ill that they do not turn up for work in the first place).

Although iMars adds another ingredient to this cocktail, the crowd, it helps me to work towards a “Goldilocks” level of autonomy for our volunteers, in the same way that we sought to design a product that would keep existing users and attract new ones during my PhD. iMars amalgamates images of Mars taken over 40 years. A lot of images have been taken in that time, of which only a selection will show changes in geological features. The change detection algorithm the project produces can never hope to detect these patterns as well as the volunteers, but we can refine the skill with which it sifts through the images to find ones with change, and increase our certainty about how well the crowd classifies images in which there has been geological change. Since we cannot visit the surface of Mars to ascertain the validity of the crowd’s classifications, this level of confidence will only ever be relative, but it’s one way in which we can effect crowd-computer collaboration.
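To make the idea of a “relative” level of confidence a little more concrete, here is a minimal sketch in Python. It is not the iMars pipeline: the function name, the image identifiers and the `"change"` / `"no_change"` labels are all hypothetical, and I am simply assuming that several volunteers vote on each image pair and that the share of volunteers who agree with the majority can stand in for confidence when there is no ground truth to check against.

```python
from collections import Counter


def crowd_consensus(votes):
    """Return (majority_label, agreement) for one image's volunteer votes.

    `agreement` is the fraction of volunteers who chose the majority label.
    It is only a relative measure: it says how much the crowd agrees with
    itself, not whether the crowd is right.
    """
    if not votes:
        raise ValueError("need at least one vote")
    counts = Counter(votes)
    # most_common(1) returns the label with the highest count; on a tie,
    # the label that was seen first wins.
    label, n = counts.most_common(1)[0]
    return label, n / len(votes)


# Hypothetical votes from volunteers on two image pairs.
votes_by_image = {
    "pair_001": ["change", "change", "no_change", "change"],
    "pair_002": ["no_change", "no_change", "no_change"],
}

consensus = {
    image_id: crowd_consensus(votes)
    for image_id, votes in votes_by_image.items()
}

for image_id, (label, agreement) in consensus.items():
    print(f"{image_id}: {label} (agreement {agreement:.2f})")
```

Image pairs with low agreement could then be shown to more volunteers, while those with high agreement could be used to refine how the algorithm sifts the archive; that division of labour is my assumption about how such a score might be used, not a description of the project’s method.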